
Overview

Automated item picking is an important skill for many robot applications, including warehousing, manufacturing, and service robotics. Generalized picking in cluttered environments requires the successful integration of many challenging subtasks, such as object recognition, pose recognition, grasp planning, compliant manipulation, motion planning, task planning, task execution, and error detection and recovery.

In an automated Amazon warehouse, thousands of mobile robots move 1-meter square shelving units from storage locations to picking stations and then back to storage. Associates stand in the stations, pick items off the shelves, and put them into boxes that are shipped to customers. A typical bin on a shelf will have up to 10 items in it, often packed tightly together, and the associate is able to identify the right product, pick it out of the bin, scan the barcode for verification, and put the item in a box, all in only a few seconds. A typical Amazon warehouse holds several million different products. While many of these items are regularly shaped, like books and DVDs, there are also teddy bears, children's necklaces, vacuum-packed USB sticks, and thousands and thousands of other shapes, sizes, and materials.

The first Amazon Picking Challenge poses a simplified version of the picking task. Contestants' robots will be placed in front of a stationary Kiva shelf. The contest shelf will be relatively lightly populated, with many of the bins holding only a single item, and a few holding multiple copies of the same item. A few bins may hold multiple different items. The products will range in shape and size. Some will be solid cuboids, but some will be items that are pliable and/or harder to grasp.

Competition shelves and products (from Amazon, naturally) will be sent to qualifying entrants. Contestants will be allowed to use any reasonable type of robot they can bring to the competition. It is not a requirement that the robots be humanoid. The robot may be stationary, but once it is given the challenge data it must operate autonomously. For contestants who cannot bring a robot, we are willing to work with companies to make robots available at the competition as a base platform. Contestants will be scored on how many of the specified items they pick within the time limit. Contestants will lose points for picking the wrong items, dropping items, or breaking items.

This event should be attractive to members of ICRA and the general robotics community because it focuses several longstanding technical challenges on a single, clear industrial application. Moreover, advances in generalized picking will be directly applicable to other domains, like service robotics, in which robots must deal with a large number of common household items; picking an item in a warehouse is essentially the same as picking a book off a shelf in a home.

Contest Details

Prior to ICRA 2015 each team will be responsible for coordinating transportation of their system to Seattle, WA. The contest organizers and ICRA challenge committee will assist with shipping details. A setup bullpen will be arranged for teams to prepare their systems, practice, and demonstrate their work to the wider ICRA audience. Prior to each official attempt, the team will be given time to set up their system in front of the test shelf. At the start of the attempt the team will be given their work order and shelf contents via the pre-defined Contest Interface. The work order and shelf content data will contain all the items that must be picked, along with a list of the other contents of the shelf bins. The robot will then be responsible for pulling as many of the items as possible out of the shelf bins and setting them down inside the robot area within the allotted time. Points will be awarded for successfully picked items, and deducted for dropped, damaged, or incorrectly picked items.

Shelving System

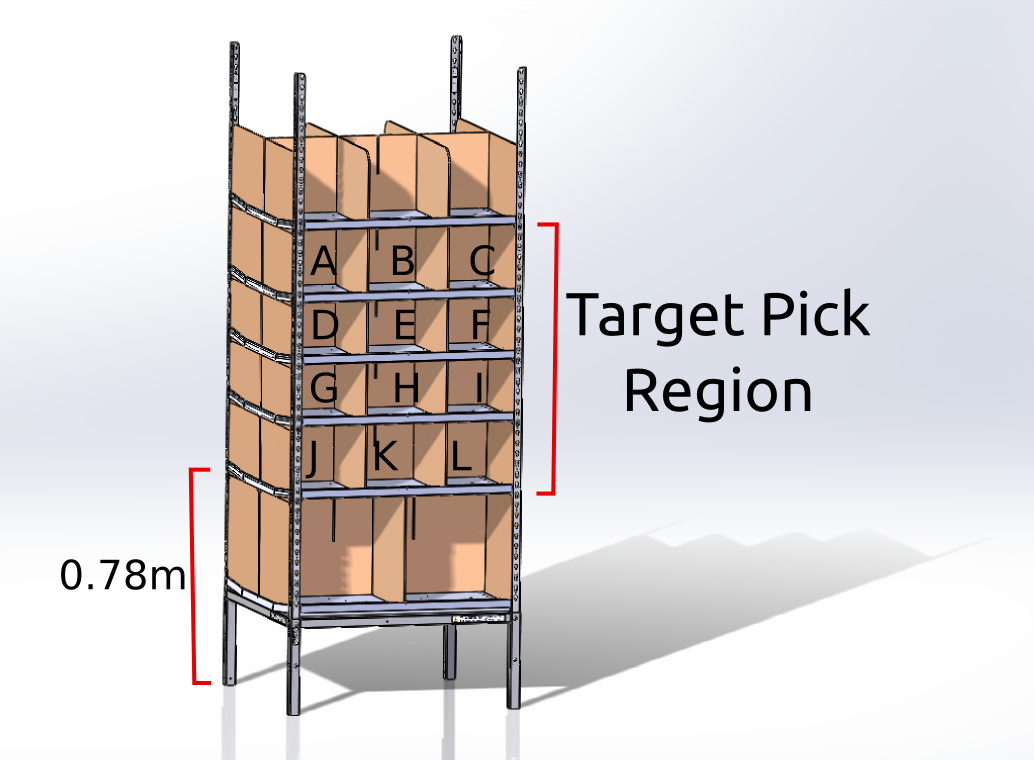

The shelving system will be a steel and cardboard structure, shown in the CAD model above. An STL and Gazebo model of the shelf is available for download here, and a physical copy will be sent to teams awarded practice equipment. Only a single face of the shelf will be presented to the teams, but the stocking of the items will be changed pseudo-randomly between attempts. Only a subset of 12 bins on the shelf face will be used: a patch of the shelf face covering the bins inside a roughly 1 meter x 1 meter area. The base of the first shelf from the floor is at a height of approximately 0.78 meters. Each bin will contain one or more items, and a single item in each bin will be designated as the target item to be picked. Each bin is labeled (A-Z) and referred to in the Contest Interface below. Teams will not be allowed to modify the shelf in any way prior to the competition (or damage it during their attempt).

Instructions for assembling the shelf kit sent to challengers can be found here: https://www.scribd.com/doc/254585504/APC-Shelf-Assembly-Instructions

Items

The set of potential items that will be stocked inside the bins is shown in the graphic below. A list of all the items can be found here. Note that the images on the Amazon shopping website are not necessarily representative of the form the item will be in at the contest. The graphic below shows the proper form (including things like packaging). The contest shelf may contain all the announced items, or a partial subset of them. Teams awarded practice equipment will be provided a set of the contest items. All items the teams are required to pick from the system will be a subset of this set. All items will be sized and located such that they could be picked by a person of average height (170 cm) with one hand.

The Amazon Picking Challenge team at Berkeley has provided a wealth of 2D and 3D data on the contest items by running them through the scanning system described in their ICRA 2014 work. Detailed models and raw data can be downloaded at:

Bin Stocking

For each attempt, the bin locations of the items will be changed. Some bins will contain a single item, and some will contain multiple items. Each bin will have only a single item that is a pick target. The bin contents will be fully described by the Contest Interface (below). The orientation of items within the bin will be randomized (e.g. a book may be lying flat, standing vertically, or have its spine facing inward or outward). Items will not occlude one another when viewed from a vantage point directly in front of the bin. Contestants can assume the following rough breakdown of bin contents:

- Single-item bins: At least two bins will contain only one item (single-item bins). The item in each of these bins will be a picking target.

- Double-item bins: At least two bins will contain only two items (double-item bins). One item from each of these bins will be a picking target.

- Multi-item bins: At least two bins will contain three or more items (multi-item bins). One item from each of these bins will be a picking target.
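The breakdown above depends only on how many items a bin holds, which also determines the base points a pick is worth (see Scoring). The Python sketch below classifies bins by item count and checks the "at least two of each kind" guarantee. The representation (a dictionary from bin label to a list of item names) and the function names are hypothetical, chosen for illustration, and counting duplicate copies of an item as separate items is an assumption.

```python
from collections import Counter

# Hypothetical representation: bin label -> list of item names in that bin.
BinContents = dict[str, list[str]]


def classify_bin(items: list[str]) -> str:
    """Classify a bin by its total item count (duplicates counted separately)."""
    n = len(items)
    if n == 0:
        raise ValueError("every contest bin holds at least one item")
    if n == 1:
        return "single"
    if n == 2:
        return "double"
    return "multi"


def check_breakdown(bins: BinContents, minimum: int = 2) -> bool:
    """Return True if there are at least `minimum` bins of each category."""
    counts = Counter(classify_bin(items) for items in bins.values())
    # Counter returns 0 for categories that never appear.
    return all(counts[kind] >= minimum for kind in ("single", "double", "multi"))


if __name__ == "__main__":
    example = {
        "bin_A": ["item_a"], "bin_B": ["item_b"],
        "bin_C": ["item_c", "item_d"], "bin_D": ["item_e", "item_e"],
        "bin_E": ["item_f", "item_g", "item_h"], "bin_F": ["item_i", "item_j", "item_k"],
    }
    print(check_breakdown(example))  # prints True
```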

Duplicate items may be encountered. If the work order targets an item in a bin that contains two copies of that item, either copy can be picked (but not both).

Workcell Layout

The shelf will be placed in a fixed, known, stationary position before the attempt starts, and the team is allowed to perform any calibration and alignment steps before the start of its timed attempt (within the reasonable bounds of the contest setup time). The precise alignment of the shelf with respect to the robot area may deviate slightly for natural reasons; the contest organizers will not intentionally misalign it. The team is not allowed to move or permanently modify the shelf. If a team translates the base of the shelf by more than 12 cm, the attempt will be stopped. An overhead view of the workcell layout can be seen in the diagram below.

The team is allowed to place its robot anywhere within the predefined robot workcell, but the robot must start at least 10 cm away from the shelf. The robot should be kept within the 2 meter x 2 meter square robot workcell for practical set-up and tear-down purposes at the competition, but exceptions will be considered for larger systems. Each robot must have an emergency-stop button that halts the motion of the robot. 110 Volt US standard power will be provided in the robot workcell.

Items need to be moved from the shelf into a container in the robot workcell referred to as the order bin. Teams may place the order bin anywhere they prefer. The order bin is a plastic tote available from the company U-Line (http://www.uline.com/Product/Detail/S-19473R/Totes-Plastic-Storage-Boxes/Heavy-Duty-Stack-and-Nest-Containers-Red-24-x-15-x-8?model=S-19473R&RootChecked=yes). Teams may bring tables and other structures to support the bin with their equipment, and the organizers will provide several table options on-site. The robot must not be supporting the order bin at the beginning or end of your challenge period, but it is allowed to pick up and move the bin. If you end your run while the robot is still supporting the bin, your team's final score will be only half the points of the items in the bin. If the robot drops the bin, it will count as dropping all of the items in the bin. If a team has questions about what constitutes 'supporting' the bin, or other related questions, contact the mailing list or the committee and we can clarify for your situation. Accidentally moving the order bin within the robot workcell will not be penalized. Moving the order bin outside the workcell will result in no points being awarded for the objects in the bin.

Contest Interface

The Contest Interface will consist of a single JSON file, handed out to each team prior to the start of its attempt, that defines two things:

- The item contents of all bins on the shelf face

- The work order detailing what item should be picked from each bin

An example JSON file can be found here: apc.json. To check the validity of a JSON file you have made yourself, you can try the script interface_test.py. To generate various random valid interface files, try the script interface_generator.py.
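As a rough sketch of how a team might consume the interface file, the Python below loads it and extracts the target item for each bin, enforcing the Bin Stocking rules that each target must be listed in its bin and that each bin has exactly one target. The key names `bin_contents` and `work_order`, and the `{"bin": ..., "item": ...}` shape of work order entries, are assumptions about the apc.json layout; check them against apc.json and interface_test.py before relying on this. The function name and item names are hypothetical.

```python
import json


def load_interface(text: str) -> tuple[dict[str, list[str]], dict[str, str]]:
    """Parse an interface file; return (bin contents, target item per bin).

    Assumed layout: {"bin_contents": {bin: [items]},
                     "work_order": [{"bin": bin, "item": item}, ...]}
    """
    data = json.loads(text)
    bin_contents = data["bin_contents"]
    targets: dict[str, str] = {}
    for entry in data["work_order"]:
        bin_id, item = entry["bin"], entry["item"]
        if bin_id not in bin_contents:
            raise ValueError(f"work order refers to unknown bin {bin_id}")
        if item not in bin_contents[bin_id]:
            raise ValueError(f"{item} is not listed in {bin_id}")
        if bin_id in targets:
            raise ValueError(f"{bin_id} has more than one target")
        targets[bin_id] = item
    # Every stocked bin is expected to have exactly one target.
    missing = set(bin_contents) - set(targets)
    if missing:
        raise ValueError(f"no target for bins: {sorted(missing)}")
    return bin_contents, targets


if __name__ == "__main__":
    example = json.dumps({
        "bin_contents": {"bin_A": ["item_x"], "bin_B": ["item_y", "item_z"]},
        "work_order": [{"bin": "bin_A", "item": "item_x"},
                       {"bin": "bin_B", "item": "item_z"}],
    })
    contents, targets = load_interface(example)
    print(targets)  # prints {'bin_A': 'item_x', 'bin_B': 'item_z'}
```

Failing fast on an inconsistent file is a deliberate choice here: an error during the two-minute upload window is easier to catch than a robot reaching for an item that is not there.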

Rules

The first day of the contest will be used for practice attempts. Teams will be scored during practice, but the outcome will not count toward prize evaluations. A schedule for scored attempts will be distributed prior to the contest.

An attempt is defined as a single scored run. The attempt starts when the team is given the Contest Interface file, and the attempt ends when one of the following conditions is met: the 20 minute time limit expires, the leader of the team verbally declares the run is complete, or a human intervenes (either remotely or physically) with the robot or shelf.

One hour prior to the start of an attempt, the team will be given access to set up its system at the contest shelf. Ten minutes prior to the start of the attempt, the organizers will obscure the contestants' view of the shelf and randomize the items on the shelf. Then, 2 minutes prior to the start of the attempt, the organizers will give the team a JSON file containing the shelf layout and work order as described in the Contest Interface. The team will then be allowed to upload the data to its system, after which the shelf will be unveiled and the attempt started. Items that appear in the work order can be picked in any order.

No human interaction (remote or physical) is allowed with the robot after uploading the work order and starting the robot. Note that this precludes any teleoperation or semi-autonomous user input to the robot. Human intervention will end the attempt, and the score will be recorded as the score prior to the intervention. Each team will have a maximum time limit of 20 minutes to complete the work order. The team is free to declare the attempt over at any time prior to the end of the 20 minute period. The number of attempts each team is allowed will be based on the number of participants (and decided prior to the contest). In the event teams are allowed multiple attempts the highest score from each team will be used to determine the contest outcome.

Robots that are designed to intentionally damage items or their packaging (such as piercing or crushing) will be disqualified from the contest. Questionable designs should be cleared with the contest committee prior to the competition.

Scoring

Points will be awarded for each target item removed from the shelf, and points will be subtracted for all penalties listed below. The points awarded vary based on the difficulty of the pick.

| Action | Points |
|---|---|
| Moving a target item from a multi-item shelf bin into the order bin | +20 |
| Moving a target item from a double-item shelf bin into the order bin | +15 |
| Moving a target item from a single-item shelf bin into the order bin | +10 |
| Target Object Bonus | +0 to +3 |
| Moving a non-target item out of a shelf bin (and not replacing it in the same bin) | -12 |
| Damaging any item or packaging | -5 |
| Dropping a target item from a height above 0.3 meters | -3 |

Moving a target item from a multi-item shelf bin into the order bin: A target object picked from a shelf bin that contains 3 or more total items. The item must be moved into the order bin.

Moving a target item from a double-item shelf bin into the order bin: A target object picked from a shelf bin that contains 2 total items. The item must be moved into the order bin.

Moving a target item from a single-item shelf bin into the order bin: A target object picked from a bin that contains only that item. The item must be moved into the order bin.

Target Object Bonus: An added point bonus uniquely specified for each different object. The bonus points are added to the team's score for each target object that is successfully put in the order bin.

Moving a non-target item out of a bin (and not replacing it): A penalty is assigned for each item removed from (or knocked out of) the shelf that is not a target item. If the robot places the item back on the shelf in the same bin, there is no penalty.

Damaging any item or packaging: A penalty is assigned for each item that is damaged (both target and non-target items). Damaging will be assessed by the judges. An item can be considered damaged if a customer would not be likely to accept the item if it was delivered in that condition.

Dropping a target item from a height above 0.3 meters: A penalty is assigned for each item dropped from higher than 0.3 meters. Note that this only applies to target items.

For example: A team successfully picks two items out of single-item bins (+10 +10), and one item out of a multi-item bin (+20). The first object was worth +0 bonus points, the second worth +3, and the third worth +1. However, in the process of picking they knock another item out of its bin (-12) and it falls to the floor. Also, when they go to place their first item down in the robot workspace it slips from the hand 0.5 meters from the ground (-3). This would result in the team having a total score of 29 points.
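The scoring rules can be written as a small function. This Python sketch assumes each successful pick is recorded with its bin category and its per-object bonus, and that penalties are tallied as counts; the `Pick` class and `score` function are hypothetical names. Running it on the worked example above reproduces the 29-point total.

```python
from dataclasses import dataclass

# Base points per bin category, from the scoring table.
BASE_POINTS = {"single": 10, "double": 15, "multi": 20}
PENALTY_NON_TARGET = 12  # non-target item removed and not replaced
PENALTY_DAMAGE = 5       # any damaged item or packaging
PENALTY_HIGH_DROP = 3    # target item dropped from above 0.3 m


@dataclass
class Pick:
    bin_kind: str   # "single", "double" or "multi"
    bonus: int = 0  # per-object Target Object Bonus, 0 to 3

    def points(self) -> int:
        if not 0 <= self.bonus <= 3:
            raise ValueError("bonus must be between 0 and 3")
        return BASE_POINTS[self.bin_kind] + self.bonus


def score(picks: list[Pick], non_target_removed: int = 0,
          damaged: int = 0, high_drops: int = 0) -> int:
    """Total points for successful picks minus all penalties."""
    total = sum(p.points() for p in picks)
    total -= PENALTY_NON_TARGET * non_target_removed
    total -= PENALTY_DAMAGE * damaged
    total -= PENALTY_HIGH_DROP * high_drops
    return total


if __name__ == "__main__":
    # Worked example: two single-item picks and one multi-item pick.
    example = [Pick("single", 0), Pick("single", 3), Pick("multi", 1)]
    print(score(example, non_target_removed=1, high_drops=1))  # prints 29
```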

In the event of a point tie the team that ended their run first (the team with the shortest time) will be declared the winner. In the event that two teams are tied on points and time they will split the prize. The omission of time as a critical challenge metric is intentional. In this first year of the picking challenge we want to encourage participants to focus on picking as many items as possible, rather than picking a select few items as fast as possible.
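Together with the rule that each team's highest-scoring attempt counts, the tie-break amounts to sorting teams by score (descending) and then by run time (ascending). In this Python sketch the input format and the function name are hypothetical, and picking the shorter run when a team has two attempts with the same best score is an assumption the rules do not address. Teams that match on both score and time end up in the same group, and would split the prize for that place.

```python
from itertools import groupby


def rank_teams(attempts: dict[str, list[tuple[int, float]]]) -> list[list[str]]:
    """Return groups of teams in finishing order; a group shares one place.

    `attempts` maps team name -> list of (score, duration_seconds) per attempt.
    """
    best: dict[str, tuple[int, float]] = {}
    for team, runs in attempts.items():
        # Highest score wins; among equal scores prefer the shorter run (assumption).
        best[team] = max(runs, key=lambda r: (r[0], -r[1]))
    # More points first; on equal points, the shorter run first.
    ordered = sorted(best, key=lambda t: (-best[t][0], best[t][1]))
    # Consecutive teams with identical (score, time) share a place.
    return [list(group) for _, group in groupby(ordered, key=lambda t: best[t])]


if __name__ == "__main__":
    results = {
        "team_1": [(29, 900.0), (35, 1200.0)],
        "team_2": [(35, 1000.0)],
        "team_3": [(20, 600.0)],
    }
    print(rank_teams(results))  # prints [['team_2'], ['team_1'], ['team_3']]
```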

Prizes

To motivate contestants to focus on the full end-to-end task, several prizes will be awarded to those with the best performance (as judged by the contest Rules). To be eligible for prizes, teams must register their participation and sign the contest agreement by the contest enrollment closure date. The top three teams will receive monetary prizes as listed below:

| Place | Prize (USD) | Minimum Score for Full Prize |
|---|---|---|
| 1st Place | $20,000.00* | 35 points |
| 2nd Place | $5,000.00* | 25 points |
| 3rd Place | $1,000.00* | 15 points |

* All monetary prizes are conditional on eligible teams completing the minimum score criteria for that prize as outlined below. In the event of a scoring and time draw the tied teams will split the prize for their position. Prizes will not be awarded to teams with scores less than or equal to 0 points.

Minimum Score Criteria

To receive the full 1st to 3rd place prizes, a team must meet a minimum score criterion. If a team places in the prize pool but does not meet the minimum score for that place, it will be awarded half the prize amount for that place. For example, a team that places 1st in the competition but does not meet the minimum 1st place score will be awarded a $10,000 prize.
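The prize rules combine the minimum-score check, the half-prize fallback, the cutoff for scores of 0 or below, and splitting among tied teams. This Python sketch assumes that "meeting" the minimum means scoring at least that many points and that a tied prize is split equally; the function name is hypothetical.

```python
# Place -> (full prize in USD, minimum score for the full prize).
PRIZES = {1: (20_000.0, 35), 2: (5_000.0, 25), 3: (1_000.0, 15)}


def prize_for(place: int, score: int, tied_teams: int = 1) -> float:
    """Prize money for one team at `place`, shared among `tied_teams` teams."""
    if place not in PRIZES or score <= 0:
        return 0.0  # no prize outside the top three or for scores <= 0
    amount, minimum = PRIZES[place]
    if score < minimum:  # assumption: score >= minimum meets the criterion
        amount /= 2      # half prize when the minimum is not met
    return amount / tied_teams  # assumption: ties split the prize equally


if __name__ == "__main__":
    print(prize_for(1, 29))  # prints 10000.0 (1st place, below the 35-point minimum)
    print(prize_for(2, 29))  # prints 5000.0 (2nd place, minimum met)
```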

Practice Equipment Applications

Challenge equipment will be furnished to qualified teams that submit practice equipment applications. This includes:

- An official Kiva Pod (shelving unit)

- A set of practice inventory

All furnished equipment will be identical to that listed in the Rules and used at the contest venue. The team agrees to return the equipment after the challenge has ended.

Travel Support

Each team will be eligible for up to $6,000 (USD) of travel support for getting the team and equipment to ICRA 2015. Teams must be registered with the contest in order to apply for travel support. Applications for travel support will be due before the contest. Teams will be notified prior to the contest of their support status. If accepted for travel support the team will be notified of their maximum support budget. The team will then be reimbursed for valid expenditures relating to travel and competing in the contest up to their allowed budget amount. Valid expenses include:

- Air and ground travel expenses to and from ICRA

- Shipping expenses for the competition equipment

- Lodging expenses over the duration of ICRA

Travel support will not be affected by challenge performance. Support will be allocated based on need and distributed evenly amongst teams within the pre-allocated contest budget. Exceptions for other related expenses and additional funding will be made on a case-by-case basis if the need arises.